A.I.M.I. 1.0: A DRL for Continuous Control of Data Center Cooling

Dec 24, 2025


Modern data centers are complex environments that face volatile heat loads, changing environmental conditions, and many interacting variables, all of which make them difficult for facility and energy teams to manage. With the onset of AI factories and workloads, this problem has transcended the data center and now affects grid operators and ratepayers. There are methods that not only improve the efficiency of data centers but also slow load growth, giving grid operators time to manage the rise of AI factories. Historically, data centers were managed by static or rule-based control mechanisms for their cooling, IT management, and power systems. As AI factories have come online, it has become apparent that these systems need to work together holistically. They cannot merely react to changes in IT load or environmental conditions; they need to predict those changes and adjust setpoints in advance to reduce peak events, inefficiencies, and the risk of cooling, power, and other failures.

Artificial Intelligence for Managing Infrastructure (A.I.M.I.®) provides a unique solution for connecting data center infrastructure, from IT to cooling to power systems, through an intelligent system that predicts and controls these systems holistically. This article builds on prior artificial intelligence systems for data center management by training and experimenting with a Deep Reinforcement Learning (DRL) model, A.I.M.I.® 1.0 or AIMI1, designed specifically for continuous action spaces such as data centers. In a mock data center lab environment (with production-grade equipment and real-time changes made under various conditions), the model has been shown not only to improve the efficiency of HVAC systems but also to maintain those efficiencies as the heat load changes. This environment is complementary to the conditions of real-life production environments. The results of this paper and the AIMI1 model provide an introduction to a set of physical AI models designed specifically for AI factories. These models can be deployed today to address inefficient data center management and to give the grid much-needed relief and reduced risk from data center load growth.

How does A.I.M.I.® 1.0 work?

A.I.M.I. 1.0 runs as a closed-loop reinforcement learning controller that operates at a fixed time interval. During operation, the model connects to, predicts, and controls production-grade data center equipment autonomously. In the accompanying paper, we demonstrate how the model controls a computer room air conditioning (CRAC) unit while learning from telemetry acquired from energy meters (IT and HVAC load), temperature and humidity sensors (supply, return, rack level, ambient, plenum), and live equipment (CRAC, condenser, load bank, sensors, etc.). The AIMI1 model reads asynchronous telemetry data and writes control outputs through SNMP. This data informs the model of the thermodynamic and operational state of the environment as changes are made in real time.
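To make the fixed-interval loop concrete, here is a minimal Python sketch of a read, decide, write cycle. It is an illustration only and not the AIMI1 implementation: `read_telemetry`, `choose_setpoints`, and `write_setpoints` are hypothetical callables standing in for the SNMP reads, the policy, and the SNMP writes described above.

```python
import time
from typing import Callable, Dict

Telemetry = Dict[str, float]
Setpoints = Dict[str, float]


def run_control_loop(
    read_telemetry: Callable[[], Telemetry],          # hypothetical: e.g. SNMP polling
    choose_setpoints: Callable[[Telemetry], Setpoints],  # hypothetical: the policy
    write_setpoints: Callable[[Setpoints], None],     # hypothetical: e.g. SNMP set
    interval_s: float,
    max_steps: int,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run a fixed-interval read -> decide -> write loop; return steps completed."""
    steps = 0
    for _ in range(max_steps):
        state = read_telemetry()          # latest view of the environment
        action = choose_setpoints(state)  # setpoints chosen for this interval
        write_setpoints(action)           # push setpoints to the equipment
        steps += 1
        sleep(interval_s)                 # wait out the control interval
    return steps
```

Passing the I/O functions in as arguments keeps the loop testable without hardware: in a dry run, `write_setpoints` can simply append to a list.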

The live environment produces a dynamic, continuous state representation, and the policy network outputs continuous-valued control actions that manipulate the CRAC's setpoints, such as supply air temperature, fan speed, and humidity. These setpoints are bounded and penalized within the temperature and energy constraints referenced by ASHRAE TC 9.9. AIMI1's actions are produced by a stochastic policy that is learned during training and developed through controlled exploration during the experiment. This yields a stabilized policy that produces smooth, repeatable behavior that is safe to operate in mission-critical environments.
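One common way to obtain bounded continuous actions from a stochastic policy is to sample from a Gaussian and squash the sample with tanh before rescaling it to the setpoint range. The sketch below assumes that technique purely for illustration; this article does not specify AIMI1's policy architecture, and the function names and the 18 to 27 °C range in the usage lines are hypothetical.

```python
import math
import random


def sample_bounded_action(mean: float, log_std: float, low: float, high: float,
                          rng: random.Random) -> float:
    """Sample from a Gaussian, squash with tanh, and rescale to [low, high]."""
    raw = rng.gauss(mean, math.exp(log_std))  # unbounded stochastic sample
    squashed = math.tanh(raw)                 # now within [-1, 1]
    return low + 0.5 * (squashed + 1.0) * (high - low)


def deterministic_action(mean: float, low: float, high: float) -> float:
    """Noise-free action, as might be used once exploration is switched off."""
    return low + 0.5 * (math.tanh(mean) + 1.0) * (high - low)


# Hypothetical usage: a supply air setpoint bounded to 18-27 degrees C.
rng = random.Random(0)
exploratory = sample_bounded_action(0.0, -1.0, 18.0, 27.0, rng)
steady = deterministic_action(0.0, 18.0, 27.0)  # midpoint of the range
```

A smaller `log_std` narrows the spread of sampled setpoints, which is one way exploration could be kept controlled on live equipment.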

The AIMI1 model passes all control outputs through a safety layer that checks that actions are within the thermal and energy constraints and then predicts the outcome of each action. If the action passes the safety layer's constraints and the predicted outcome falls within an error buffer, the AIMI1 model sends the control action to the live physical equipment. Constraints include manufacturer limits such as actuator bounds, PID loop time intervals, service level agreements (SLAs), rate-of-change limits that prevent abrupt wear and tear on mechanical units, and requirements derived from data center industry guidelines.
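The checks described above can be sketched in Python as a rate-of-change limit, an actuator clamp, and a predicted-outcome test against an error buffer. This is a simplified illustration under stated assumptions, not the production safety layer: `SetpointLimits`, `constrain`, `safe_action`, and the `predict_max_temp_c` callable are hypothetical names, and a real predictor would be a learned or physics-based model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class SetpointLimits:
    low: float       # actuator lower bound
    high: float      # actuator upper bound
    max_step: float  # largest allowed change per control interval


def constrain(proposed: float, current: float, limits: SetpointLimits) -> float:
    """Apply the rate-of-change limit, then the absolute actuator bounds."""
    step = max(-limits.max_step, min(limits.max_step, proposed - current))
    return max(limits.low, min(limits.high, current + step))


def safe_action(proposed: float, current: float, limits: SetpointLimits,
                predict_max_temp_c: Callable[[float], float],
                temp_limit_c: float, error_buffer_c: float) -> Optional[float]:
    """Return a vetted setpoint, or None if the predicted outcome is unsafe."""
    candidate = constrain(proposed, current, limits)
    predicted = predict_max_temp_c(candidate)  # hypothetical outcome model
    if predicted + error_buffer_c > temp_limit_c:
        return None  # reject: the caller keeps the current setpoint
    return candidate
```

Returning `None` rather than a silently modified value makes rejections explicit, so the caller can log them and hold the current setpoint.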

Once control commands are sent to the live equipment (in this case, an operational CRAC unit), the system waits for the duration of the control window to allow the unit to report back the new setpoint values. After the control window ends, the AIMI1 model collects the updated telemetry data from the live equipment and calculates a scalar reward value based on the energy efficiency and safety targets. In this experiment, we focused on PUE reduction while incorporating penalties for temperature excursions, excessive compressor utilization, and other operational violations. More details on how the model functions are included in the accompanying paper.
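As a rough illustration of a scalar reward of this kind, the Python sketch below combines a PUE term with penalties for temperature excursions and compressor utilization. The exact reward used by AIMI1 is in the accompanying paper, not here: the function name, the penalty weights, and the use of interval-average power as a proxy for energy are all assumptions. The 27 °C default matches the zone temperature constraint reported in the results.

```python
from typing import Sequence


def reward(it_kw: float, facility_kw: float, zone_temps_c: Sequence[float],
           compressor_util: float, temp_limit_c: float = 27.0,
           temp_weight: float = 1.0, compressor_weight: float = 0.1) -> float:
    """Higher is better: low PUE, no excursions, modest compressor use.

    The weights are hypothetical tuning values, not figures from the article.
    """
    if it_kw <= 0:
        raise ValueError("IT power must be positive to compute PUE")
    pue = facility_kw / it_kw  # ratio of interval-average powers
    # Sum of degrees above the limit across all zones (0 if none exceed it).
    excursion = sum(max(0.0, t - temp_limit_c) for t in zone_temps_c)
    return -pue - temp_weight * excursion - compressor_weight * compressor_util
```

Because the excursion term grows with every degree above the limit, a policy maximizing this reward is pushed to trade a small PUE gain against any zone crossing the threshold.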

The model has built-in fail-safes, such as reverting to the CRAC unit's original controller if the model detects any anomalies, telemetry dropouts, or constraint violations. This fail-safe mechanism does not interrupt the safe operation of mission-critical environments such as data centers. All model parameters and telemetry are periodically checkpointed to support monitoring and auditing through A.I.M.I.'s Data Center Dashboard, as showcased below:

Experimental Setup: Our Data Center Lab

All our experiments were conducted in a mock data center lab that we built specifically to imitate the thermodynamic and airflow behavior of a small-scale commercial data hall. The lab consists of a 10 ft x 10 ft x 8.5 ft enclosure with two chambers: an upper chamber (the hot air return plenum) and a lower chamber that houses all the equipment. The equipment in the lower chamber includes a Liebert PDX direct expansion (DX) Computer Room Air Conditioning (CRAC) unit, a programmable air load bank that mimics IT load and is isolated in its own rack, and the lab's IT equipment, located adjacent to the load bank.

The lab containment was built using aluminum extrusions for its framing and steel brackets and sleeves for rigidity. For the casing of the containment, we used polycarbonate panels, and for the entryway, we used a commercial-grade smooth PVC strip curtain. This allows easy access into the containment while maintaining airflow separation from the outside environment in the warehouse. Overall, this construction provides a semi-sealed, thermally stable boundary that supports realistic airflow and pressure differentials.

The CRAC unit is placed so that cold air is pushed vertically downward and spreads through the rest of the containment and racks. The cooled air drawn in by the load bank is heated and rises to the upper chamber (the hot air return plenum) through a small opening (channel) in the ceiling of the lower chamber. The hot air in the plenum is then fed into the CRAC's intake. Because of this separation of chambers, most of the hot air does not mix with the cold air, so the CRAC receives close to the exact heat output of the load bank rack and IT rack. This configuration reflects the containment commonly used in small to medium-sized data centers. Such non-ideal airflow and containment are common in real data centers, which reinforces the limitations of static and purely reactive control approaches.

The load bank is configured to draw air from within the containment and exhaust heated air towards the back of the server rack. The heat output is controlled through (medium and high) load bank regimes, allowing for repeatable low, medium, high, and peak thermal loads that are representative of commercial data center workload changes.

Experimental Protocol

The experiment primarily had two states, with variations in between: a controlled baseline with the CRAC in auto mode, and autonomous control using A.I.M.I. 1.0. These experiments were logged across multiple days under comparable conditions, with the load bank heat regimes transitioning between low, medium, high, and peak.

For each run, we initialized the CRAC in the unit's native auto mode and allowed it to stabilize. Once it had stabilized, we verified all instrumentation and the data being acquired for that day before applying the load. In the autonomous control runs using A.I.M.I. 1.0, the automation was enabled only after the baseline had stabilized, to ensure comparable initial conditions between the control modes.

Each experiment was conducted over several hours and around the same time of day to capture steady-state behavior, transient responses to load changes, and long-term control stability. When A.I.M.I. was on, we exported data only after turning off the automation, to avoid partial or inconsistent readings.

Primary Metrics

The performance of these experiments is evaluated using metrics derived directly from measured telemetry rather than inferred model states. The experiment's primary metrics are measured in the following way:

HVAC Energy Consumption (kWh): The integral of measured CRAC electrical power over time

Total Facility Energy Consumption (kWh): The summation of all power from the panel

Power Usage Effectiveness (PUE): Defined as ‘Total Facility Energy’ divided by ‘IT Energy’

Temperature (F): Supply air, return air, rack-level locations, and outside temperature

Thermal Stability: Quantified using temperature variance and oscillation metrics

Response Time: The delta in time following step changes in IT load
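The energy, PUE, and variance metrics above can be computed from logged samples as in the following Python sketch. It assumes trapezoidal integration and population variance, which are reasonable defaults but are not confirmed by the article; the function names are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def energy_kwh(power_kw: Sequence[float], time_h: Sequence[float]) -> float:
    """Trapezoidal integral of power (kW) over time (hours), giving kWh."""
    if len(power_kw) != len(time_h) or len(power_kw) < 2:
        raise ValueError("need at least two matching power/time samples")
    total = 0.0
    for i in range(1, len(power_kw)):
        dt = time_h[i] - time_h[i - 1]
        total += 0.5 * (power_kw[i - 1] + power_kw[i]) * dt
    return total


def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def temperature_variance(temps: Sequence[float]) -> float:
    """Population variance of a temperature series, one stability measure."""
    return pvariance(temps)
```

Trapezoidal integration tolerates unevenly spaced samples, which matters when telemetry arrives asynchronously.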

Results: AIMI1 vs original PID controller at Medium Load

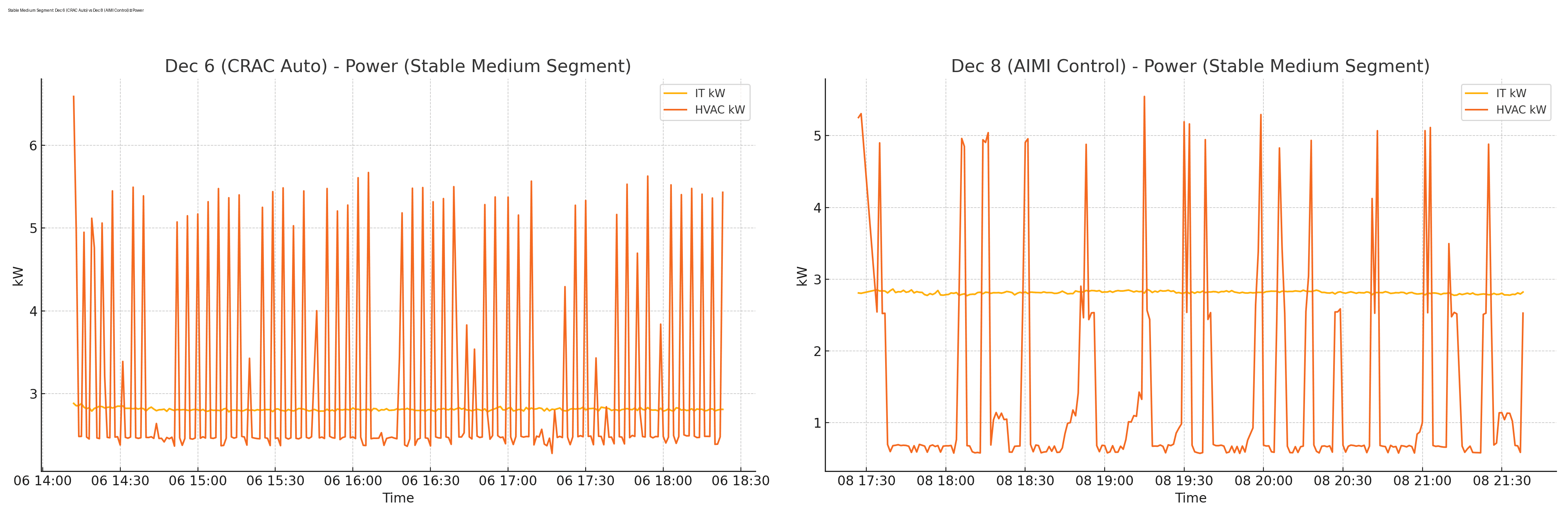

This experiment compared the original PID controller with AIMI1-driven control of the CRAC unit at various load bank settings (medium, high, peak). The comparisons are visualized below using data from power meters that read energy from the HVAC, the load bank, and all facility equipment. Readings also included temperature and humidity from sensors (labeled COM) located throughout the data center containment.

In the medium load regime, comparing separate 4-hour test runs of the original PID controller and the AIMI1 model, the results show a 53.6% reduction in HVAC energy consumption, with AIMI1 drawing 1.44 kW and the original PID controller drawing 3.08 kW during the experimental run. AIMI1 chose to reduce compressor usage and primarily tuned fan speed as zone temperatures increased, engaging the compressor only when multiple zones reached the high threshold of 27 °C. The original PID controller used the compressor more often because its return air temperature setpoint was held at 26 °C during its experimental runs.
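For readers who want to reproduce a reduction figure like this from their own meter data, the calculation is a simple relative difference. The short Python sketch below applies it to the two rounded power values quoted above; `percent_reduction` is a hypothetical helper name. With those rounded inputs the result is about 53.2%, so the reported 53.6% presumably comes from unrounded measurements or integrated energy totals, which is an assumption on our part here.

```python
def percent_reduction(baseline: float, new: float) -> float:
    """Relative reduction of `new` versus `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - new) / baseline


# Rounded figures from the medium load comparison: PID 3.08 kW, AIMI1 1.44 kW.
print(round(percent_reduction(3.08, 1.44), 1))
```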

Medium Load Regime: Power Comparison (Original - left | AIMI1 - right)

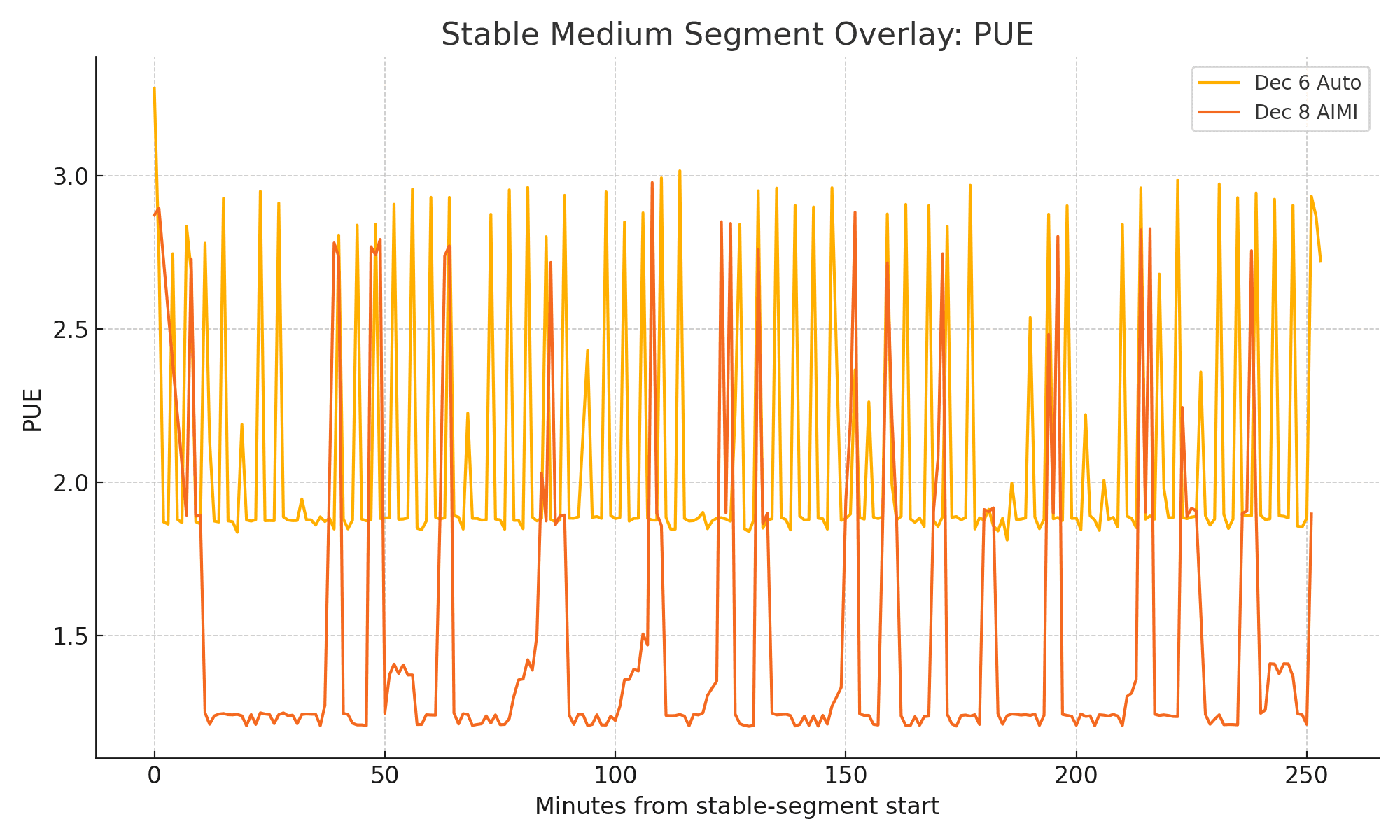

The AIMI1 model achieved a PUE reduction of 28% during its operation.

Medium Load Regime: PUE overlay

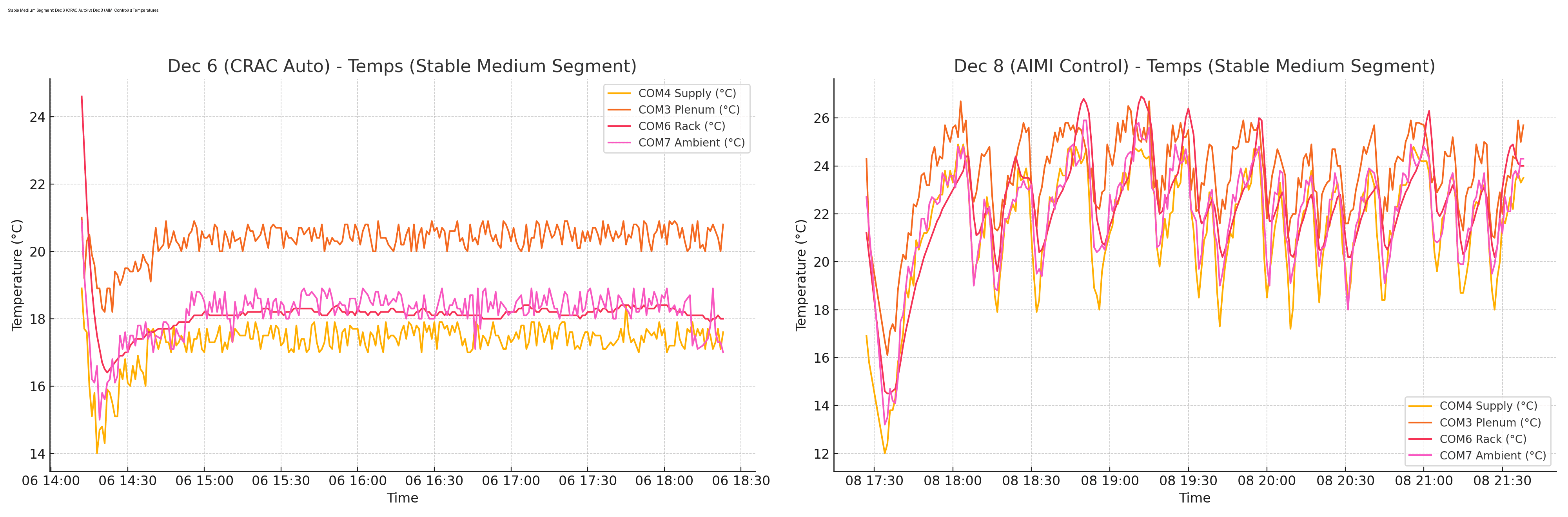

In the context of data centers, temperature is one of the critical variables or states that shows how dynamic a continuous action space can be. Below are the zone temperature comparison results between AIMI1 operating the CRAC unit and the unit's native PID controller. The findings show how AIMI1's DRL model focused on keeping all temperatures under the 27 °C constraint while also optimizing its reward function for an optimal PUE value. This caused temperatures to rise across all zones towards the constraint until the model decided to turn on the compressors to minimize excursions. What makes the model different is how it learned to incorporate all temperature zones into its policy, while the original PID controller based its logic solely on the return air temperature reading.

It’s important to note that the COM6 sensor measured the Rack Exhaust Max temp, which showed a 2.3 °C+ higher mean reading during AIMI1’s operation vs the original PID controller.

Medium Load Regime: Zone Temperature Comparison (Original - left | AIMI1 - right)

Prior use of RL in data center automation vs AIMI1

In the prior art, researchers used predictive modeling methods to optimize data center cooling setpoints. This approach uses an accurate model to predict future states before taking action. It is optimized for safety in mission-critical data center environments, where SLA excursions can lead to downtime, loss of revenue, and mechanical failure. However, such a model might not perform well in environments where states change often, such as the modern data center with AI workloads, fragmented equipment, and diverse action spaces.

Researchers also developed simulation-based RL that learns virtually by emulating the physical environment digitally, then recommends and takes actions on setpoints while comparing them against actual values, or against simulated values if the model was not taking action. This approach improves on the framework for safely taking actions in live environments by derisking them through simulations that mimic the real environment. However, one can argue that the model is limited by the accuracy of the simulation and by how quickly a simulation can be produced for edge cases or phenomena experienced in the real world.

FLUIX introduces the AIMI1 model, a Deep RL-based model that learns by making changes in continuous action spaces, such as live data center environments. The model has heavy guardrails for safety, reward functions, and feedback loops to ensure usability and reliability in production environments. It expedites learning through safe exploration, with multiple actions taken in real time. The quality of learning comes from experiencing the states that result from actions taken in real time, and the feedback loops can be almost instantaneous. Although the model can be well suited to modern data center environments (as shown in the results above), it does take time to prepare the safety guardrails, test the fail-safes, and take precautionary measures to ensure the uptime of mission-critical environments. More information about prior work can be found in the accompanying paper.

What happens next?

Our work enables future strides in autonomous controls and improvements in data center management. FLUIX is working on deploying agents such as the AIMI1 model with increased awareness through data retrieved from workload schedulers, GPU telemetry, and networking. This allows the model to learn from token creation, scheduled jobs, and chip-level heat output, which provides higher-fidelity prediction of server volatility. By training on this data and learning from the initial job scheduling, the AIMI class of agentic models is learning to associate spikes in IT energy and chip temperatures with specific tasks. We denote this model A.I.M.I.® 2.0 or AIMI2.

Early results from the AIMI2 model, which we will be releasing in 2026, show that it can predict spikes from job scheduling 2x faster than the AIMI1 model, which relies on holistic sensor ingestion, and 10x faster than the original PID controller, which reacts to a heat load increase only once it has reached the return temperature sensor, when it is too late. The AIMI2 model offers a practical method of not only improving cooling efficiency but also reducing stress on power systems and making GPU orchestration more efficient by holistically managing job allocation, from server-level resources down to cooling availability and power stability. Leading data center operators have highlighted that AI workloads are linked to various execution phases with tight tolerances around coupled power draw and thermal behavior, which can lead to transient peaks, throttling of chips and electronics, and residual heat events that can propagate into overall cooling systems. AIMI2 will provide the first holistic management of agents across cooling, power, and IT in live production environments. In future work, we plan to release results from the AIMI1 and AIMI2 models deployed within live production data centers and with major utility partners.

Access to the accompanying technical white paper is available by submitting an Interest Form here.

FLUIX AI is hiring! If you'd be interested in joining us, reach out!

For data center operators, industry experts, or researchers interested in our work, please write to: info@fluix.ai