Why Air-Gapped & On-Prem AI Makes Sense for Data Center Control

Jan 6, 2026

When software controls physical infrastructure, architectural mistakes have immediate consequences. Cooling systems do not fail gracefully. Power systems do not wait for retries. In a data center, control software becomes part of the facility itself.

That reality guided our earliest decisions at FLUIX. Before pursuing SOC 2 Type II compliance, we had already committed to running control systems on-prem and, where required, air-gapped. The audit came later. It mattered because it confirmed that our controls were not just well designed, but enforced consistently under live conditions. Compliance matters, but in data centers, safety is an architectural outcome.

Control Software Lives in a Different World

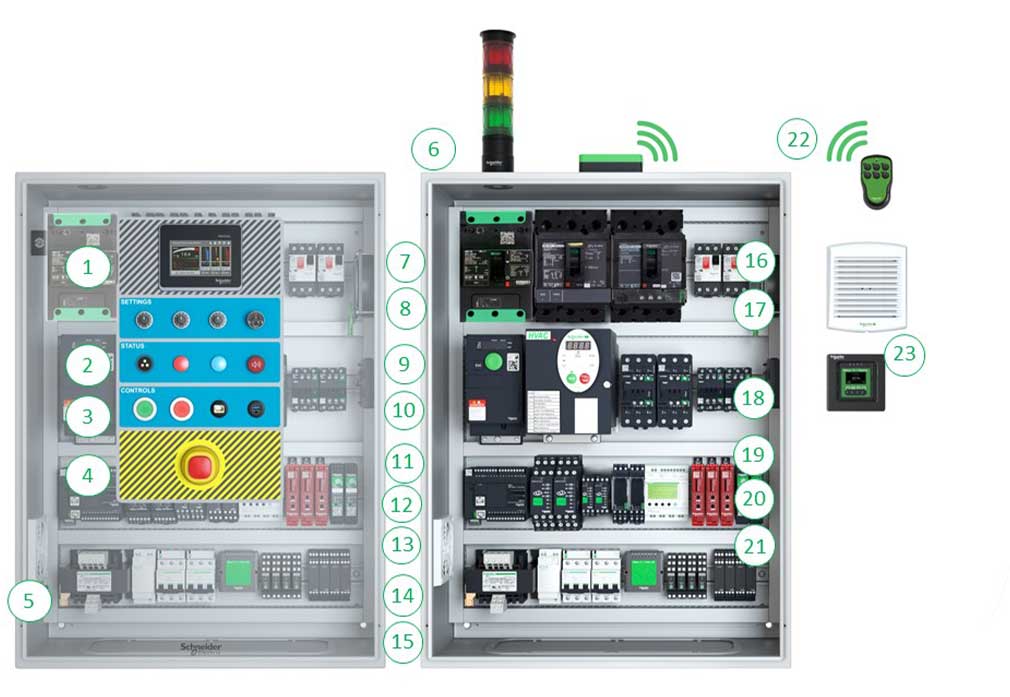

Most modern data center software exists to observe. It collects telemetry, visualizes trends, and suggests actions. That software can live almost anywhere because it does not touch the physical state of the facility. Control software is different.

When a system can adjust cooling setpoints, fan speeds, or compressor behavior, it becomes part of the facility itself. It inherits responsibility for uptime, hardware health, and operational stability. This is not an abstract distinction. It’s one you feel the moment something goes wrong.

“I started my career obsessed with the physical limits of data centers, working directly on cooling hardware inside high-security facilities. That experience made one thing clear: you can’t solve a dynamic, system-wide problem with static solutions.”

— Abhi Sastri, CEO, FLUIX

That perspective shaped how we approached control from the beginning.

Why Remote Control Expands Risk

Cloud connectivity adds flexibility. It also adds risk. Each external dependency becomes something the operator no longer fully controls. In a data center, those risks are physical. Bad control decisions can push temperatures out of bounds, throttle compute, or force shutdowns. Over time, they can shorten equipment life.

During internal testing, we asked a basic question: what happens if a control loop fails to respond during a rapid load ramp?

The answer was clear. GPUs throttle. Safeties trip. Recovery takes hours. That was enough to settle the question.

Critical infrastructure has learned this lesson repeatedly. Industrial automation systems and utilities favor locality and isolation because failure carries real consequences.

Air-gapped systems are harder to deploy and harder to maintain. They demand discipline and clear ownership. They don’t scale the same way cloud services do. Those trade-offs are real. And they are intentional. Convenience was never the goal. Predictability was.

How This Shaped A.I.M.I.® 1.0

By the time A.I.M.I.® 1.0 took shape, the architectural direction was already set.

It runs locally.

It can operate air-gapped.

It fails back to native controls cleanly.

Learning, decision-making, and safety enforcement all occur within the facility boundary. Control actions are governed by explicit guardrails and rate limits that reflect the realities of mission-critical infrastructure.
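This article does not spell out how those guardrails, rate limits, and the fallback to native controls are implemented. Purely as an illustration, the Python sketch below shows one common pattern for such a safety layer: each AI-proposed setpoint is rate-limited and clamped to a hard envelope, and a missing or stale recommendation sends the loop back toward the native setpoint. `Guardrail`, `Recommendation`, `next_setpoint`, the 30-second staleness window, and the example limits are all hypothetical; they are not A.I.M.I.®'s actual design or values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Guardrail:
    """Hypothetical hard limits for one actuator, e.g. a supply-air setpoint in degrees C."""
    min_value: float
    max_value: float
    max_step: float  # largest change allowed in a single control cycle

    def apply(self, current: float, target: float) -> float:
        # Rate limit: move toward the target by at most max_step per cycle.
        step = max(-self.max_step, min(self.max_step, target - current))
        # Hard bounds: clamp the rate-limited value into the safe envelope.
        # Bounds take precedence over the rate limit.
        return max(self.min_value, min(self.max_value, current + step))


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical setpoint proposed by the AI layer, stamped with when it was produced."""
    value: float
    timestamp: float  # seconds, on the same clock as `now`


def next_setpoint(
    current: float,
    rec: Optional[Recommendation],
    now: float,
    native_setpoint: float,
    guard: Guardrail,
    max_age_s: float = 30.0,  # assumed staleness window, illustrative only
) -> tuple[float, bool]:
    """Return (setpoint to write, whether the loop fell back to native control)."""
    # Fallback: no recommendation, or one too old to trust.
    stale = rec is None or now - rec.timestamp > max_age_s
    target = native_setpoint if stale else rec.value
    # Every write, including the handoff back to native, passes through the guardrail.
    return guard.apply(current, target), stale
```

With limits of 18 to 27 °C and a 0.5 °C step, a fresh recommendation of 30 °C from a current 22 °C produces 22.5 °C rather than 30 °C. In this sketch the return to the native setpoint is also rate-limited so the handoff does not jump; a real deployment might instead let the native controller resume directly.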

“We built A.I.M.I. to operate in the real world of continuous, physical action—not in a clean simulation. It learns the physics of a building and stays inside hard safety boundaries.”

— Chase Overcash, CTO, FLUIX

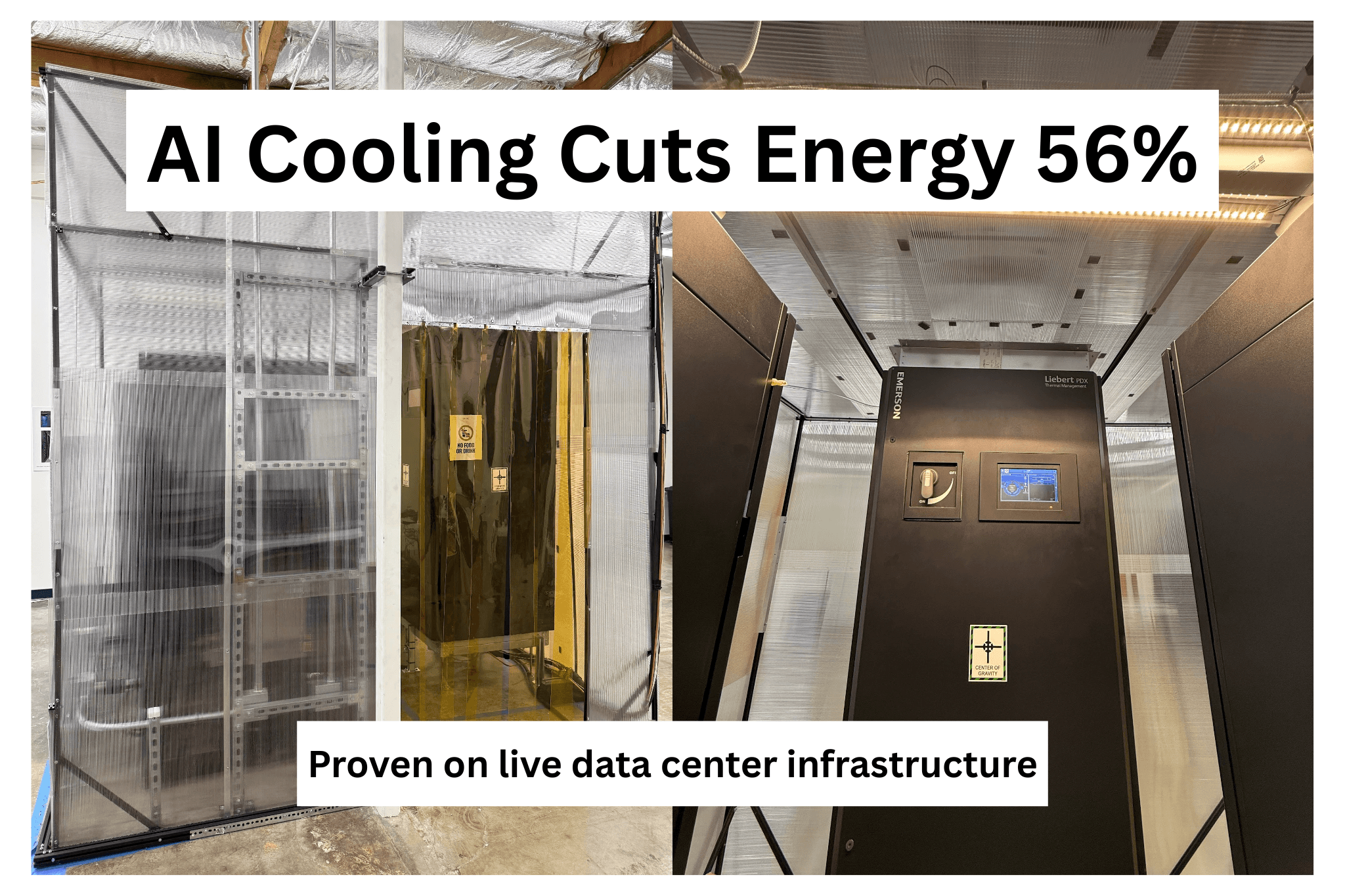

That constraint made development harder. It also made testing real. It’s why A.I.M.I.® was evaluated on production-grade equipment rather than idealized environments, and why measured results reflect live operating conditions.

As AI systems take on more responsibility in physical environments, expectations will rise. Operators will ask harder questions. Regulators will demand clearer failure modes. Investors will look beyond feature sets and focus on risk. In that environment, architecture matters as much as performance.

For data center control, air-gapped, on-prem AI is not a conservative stance. It’s a deliberate one. It’s where we chose to start.

Read the A.I.M.I.® 1.0 Technical White Paper

This article outlines why we chose an air-gapped, on-prem architecture for control software. The accompanying white paper goes deeper.

It details the A.I.M.I.® 1.0 system design, safety model, experimental setup, and measured results from live operation on production-grade data center equipment. If you are evaluating autonomous control in real facilities and want to understand how the system behaves under load, you can read the full technical paper here: